Prompt Engineering 101: Getting the Best out of Gen AI for Product Managers

Let's think step by step—how can we get the best out of Gen AI systems?

One of the most exciting and transformative aspects of generative artificial intelligence (gen AI) systems is that they can be used by anyone. Sure, building gen AI models and tools still requires specialist knowledge, but as ChatGPT has shown, actually using them is as simple as having a conversation. On the face of it, this may seem like a subtle distinction to make, but the implications are huge. Think about it… you can interact with some of the most sophisticated technology ever invented just by using everyday natural language.

The use of natural language in how we “prompt” gen AI models masks a brilliant level of complexity and wraps these new tools up into easily adoptable products. No wonder ChatGPT was the fastest-growing app of all time. However, when we can get answers to any question with only a few words, we can be both delighted and disappointed. After running over a dozen gen AI workshops, I have now seen how hundreds of people typically use tools like ChatGPT, and I believe the low barrier to entry is also stopping people from fully realising the potential of these systems.

Prompt engineering is a term that is often thrown around, and while I don’t like how pretentious it sounds, it is the best description for a set of techniques that can maximise the quality of outputs from gen AI tools. As I start to write in-depth use cases for gen AI tools, I would highly recommend you become familiar with a few key prompt engineering techniques, such as the one we’ll go through today.

Generative AI Outputs are Non-Deterministic

When I talk to people about the non-deterministic nature of gen AI tools, their eyes usually glaze over. It’s an important point to understand, but I’ll keep this brief.

Whenever you prompt ChatGPT or another gen AI tool, it could return anything. It is trying to predict the next word or form a picture on a screen that matches your description. There are near-infinite possible things it could give back to you. Since the number of possible responses is nearly infinite, the amount of useless junk that could be generated is nearly infinite, too. The responses that are actually valuable are a small minority.

Put simply, our job when prompting gen AI tools is to be specific about the types of responses we are expecting. By doing this, more often than not, we can ensure our conversations with gen AI tools fall in the “valuable” space instead of the “junk” space.
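To make the non-determinism concrete, here is a minimal sketch of how a model might pick its next word by sampling from a set of scored candidates. The word list, the scores, and the function names are all invented for illustration, and the "temperature" setting is an assumption borrowed from how language models are commonly configured, not something specific to ChatGPT.

```python
import math
import random

# Hypothetical next-word scores, invented purely for illustration.
NEXT_WORD_SCORES = {"savings": 2.0, "loans": 1.5, "rewards": 1.0, "bananas": -1.0}


def sample_next_word(scores, temperature=1.0, rng=random):
    """Sample one word with probability proportional to exp(score / temperature)."""
    words = list(scores)
    weights = [math.exp(scores[w] / temperature) for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def sample_many(scores, n, temperature=1.0, seed=None):
    """Draw n independent samples; pass a seed to make the run repeatable."""
    rng = random.Random(seed)
    return [sample_next_word(scores, temperature, rng) for _ in range(n)]


if __name__ == "__main__":
    # Different runs can print different words, including the occasional "junk" one.
    print(sample_many(NEXT_WORD_SCORES, 10))
    # A very low temperature concentrates almost all probability on the top word.
    print(sample_many(NEXT_WORD_SCORES, 10, temperature=0.1))
```

The same idea applies to prompting: a more specific prompt plays a similar role to sharper scores, shifting probability towards the responses you actually want.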

Chain of Thought

Let’s say I walked up to you at your desk and said: “Hey, can you make a one-page list of ideas for expanding my product into new markets?” How would you respond? Well, a human will typically break this large and ambiguous task down into multiple steps like:

What is your product?

What value does it provide?

Who are your customers?

Where are the untapped markets?

What are your competitors doing?

And so on. After going through all of these tasks you will finally put pen to paper and come up with some ideas, using all of the context you’ve ingested through this process combined with your pre-existing knowledge.

In carrying out this task we take for granted that a single request actually requires multiple sub-steps to complete. As humans, we intuitively understand this process and can handle the ambiguity.

But what about current gen AI chatbots? Well, this is where I see a lot of (unjustified) criticism of tools like ChatGPT coming from. People go into a chatbot conversation with a large and ambiguous task like “come up with a list of ideas” and then complain when the results are uninspired or inaccurate. What is (usually) happening is that the prompt given was vague and uninspired, so it follows that the response will be relatively vague and uninspired as well!
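One way to make a request like this less vague is to spell out the sub-steps yourself. The sketch below turns the five questions listed earlier into a numbered prompt. The function name and the exact prompt wording are assumptions for illustration, not a recommended template.

```python
# The sub-questions from the list above, copied as written.
SUB_QUESTIONS = [
    "What is your product?",
    "What value does it provide?",
    "Who are your customers?",
    "Where are the untapped markets?",
    "What are your competitors doing?",
]


def stepwise_prompt(task, steps):
    """Build a prompt that asks the model to work through steps before answering."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Task: {task}\n\n"
        "Before answering, work through these questions one at a time, "
        "asking me for anything you don't know:\n"
        f"{numbered}\n\n"
        "Then use your answers to complete the task."
    )


if __name__ == "__main__":
    print(stepwise_prompt(
        "Make a one-page list of ideas for expanding my product into new markets.",
        SUB_QUESTIONS,
    ))
```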

Let’s Think Step by Step

At a fundamental level, large language models are just trying to predict the best possible next set of words based on any prompt you provide. Help your AI companion along by giving them space to “think” before answering.

You can improve the robustness of your gen AI conversations right now with only five words: Let’s think step by step.

When testing zero-shot reasoning, researchers found that adding “Let’s think step by step” to a prompt improved accuracy on the MultiArith arithmetic benchmark from 17.7% to 78.7% (using InstructGPT’s text-davinci-002 model, a predecessor of ChatGPT).

Source: Large Language Models are Zero-Shot Reasoners (https://arxiv.org/pdf/2205.11916.pdf)

Intuitively, it makes sense. Going back to our earlier example, if I forced you to give me a list of ideas before you had a chance to think about it, your ideas may be bland and uninspired too.
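If you are sending prompts from a script rather than typing them, the same trick is a one-line helper. This is a minimal sketch: the function name is hypothetical, and the "Q: ... A: ..." layout is my reading of the prompt format used in the paper cited above. In a chat window you can simply add the phrase to the end of your message.

```python
COT_TRIGGER = "Let's think step by step."


def with_step_by_step(question: str) -> str:
    """Wrap a question so the answer starts with the step-by-step trigger phrase."""
    return f"Q: {question.strip()}\nA: {COT_TRIGGER}"


if __name__ == "__main__":
    print(with_step_by_step(
        "Which customer segment should our banking app target first, and why?"
    ))
```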

Putting Theory Into Practice

There are plenty of academic examples online that show how adding phrases like “Let’s think step by step” improves quality on reasoning and arithmetic tasks. Let me bring everything together and walk you through how you can apply the principles of Chain of Thought (CoT) to everyday product tasks.

Let's revisit that example from earlier one last time. Imagine my product is a banking app and I need creative ideas to take the product forward.

Mistake #1: You Immediately Ask for the Output

I often see people treat gen AI like Google Search: they prompt for the answer. While this isn’t bad, if you have the time for it, you can get significantly improved outputs with a tweak to your approach.

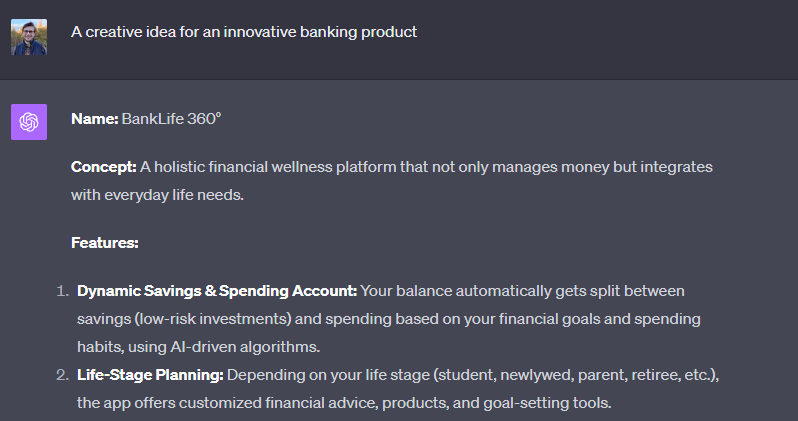

Here’s a simple prompt “A creative idea for an innovative banking product”:

The ideas here aren’t bad, but they are also very broad, unrelated, and would be unlikely to woo a bank’s proposition team, let alone secure serious project sponsorship.

Try this instead:

Start by introducing your task to the chatbot. Don’t ask for the final output yet! We are trying to build up some context for the final answer. Treat ChatGPT like a team member you are onboarding to this project.
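As a rough sketch of this "onboard first, ask second" pattern, the snippet below builds the two user turns in the order you would send them. The role/content dictionaries follow a common chat-message convention and are an assumption here, as are the function name and the example goals and audience, which are made up for illustration.

```python
from typing import Dict, List


def context_first_turns(
    product: str, audience: str, goals: List[str], request: str
) -> List[Dict[str, str]]:
    """Return two user turns: an onboarding message, then the actual request."""
    goal_lines = "\n".join(f"- {goal}" for goal in goals)
    onboarding = (
        f"I'm a product manager working on {product}.\n"
        f"Our target customers are {audience}.\n"
        f"Our goals are:\n{goal_lines}\n"
        "Don't suggest any ideas yet. First, summarise your understanding "
        "and ask me any clarifying questions."
    )
    # In a real chat, the assistant's reply would sit between these two turns.
    return [
        {"role": "user", "content": onboarding},
        {"role": "user", "content": request},
    ]


if __name__ == "__main__":
    turns = context_first_turns(
        product="a banking app",
        audience="young professionals",  # hypothetical audience
        goals=["grow deposits", "increase weekly active users"],  # hypothetical goals
        request="A creative idea for an innovative banking product",
    )
    for turn in turns:
        print(turn["content"], end="\n\n")
```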

Now that we have some clear context and goals, when we ask ChatGPT essentially the exact same question, the quality of the output is significantly improved:

Mistake #2: You Don’t Provide Examples

Once you begin ideating with your AI companion it can be easy to fall back into the trap of asking broad questions and being disappointed with broad answers. One easy way to counteract this tendency is to provide examples of your desired output.

Let’s say we want to start ideating on FutureSaver. I can ask ChatGPT for some user stories:

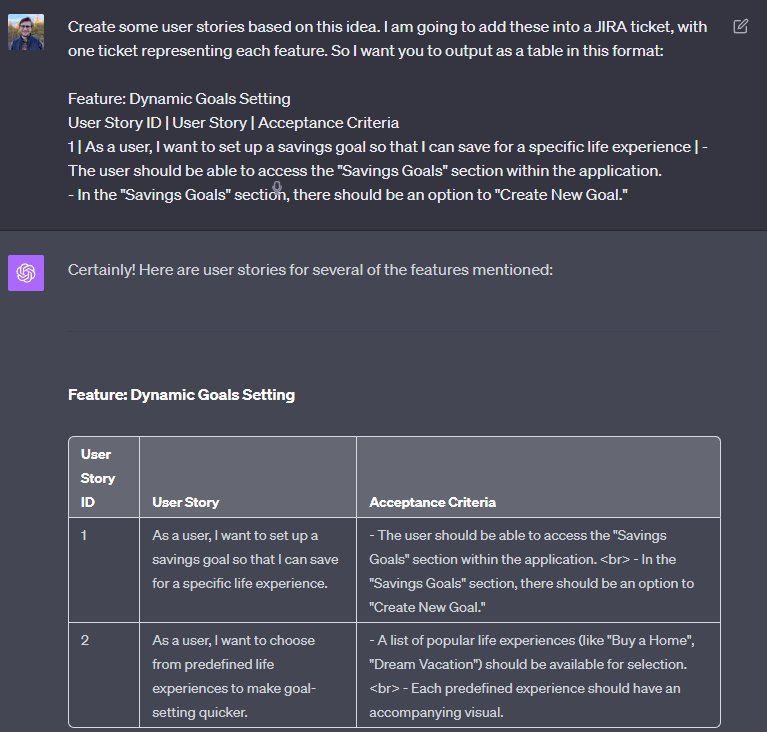

The user stories are great, but if I were working with a BA we probably wouldn’t create a page-long list of stories and instead opt for a table that plugs right into Jira. So, I’ll change my prompt to include an example of what I expect:

I timed this. It took me 3.5 minutes to write this prompt. Yes, more overhead than a simple “create some user stories” prompt, but ChatGPT came back with a full markdown table split out by feature, with a list of user stories and acceptance criteria that can be copy/pasted into Jira immediately. Getting the same ideas down on paper and then into a format compatible with Jira would typically take around an hour.
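A prompt of this kind can also be assembled programmatically. The sketch below is not the prompt I used; the column names, the example row, and the function names are hypothetical stand-ins that show how an example of the desired output can be embedded in the request.

```python
# Hypothetical example row showing the shape of output we want back.
EXAMPLE_ROW = {
    "Feature": "Savings goals",
    "User story": "As a customer, I want to set a savings goal so that I can track my progress.",
    "Acceptance criteria": "Goal amount and target date can be entered and edited.",
}


def markdown_table(rows):
    """Render a list of same-keyed dicts as a markdown table."""
    headers = list(rows[0])
    lines = [
        "| " + " | ".join(headers) + " |",
        "| " + " | ".join("---" for _ in headers) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(row[h] for h in headers) + " |")
    return "\n".join(lines)


def user_story_prompt(product_name, example_rows):
    """Ask for user stories and include an example of the expected format."""
    return (
        f"Write user stories for {product_name}.\n"
        "Return a markdown table with one row per user story, "
        "using exactly the columns and style of this example:\n\n"
        f"{markdown_table(example_rows)}"
    )


if __name__ == "__main__":
    print(user_story_prompt("FutureSaver", [EXAMPLE_ROW]))
```

Keeping the example in a small data structure makes it easy to swap in a different format, such as extra columns for priority or story points, without rewriting the prompt.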

Limitations & Considerations

Despite our best intentions, even with some prompt engineering, AI isn’t perfect. I would almost never directly use an AI output on a real product without at least going through manual validation and tweaks. However, this doesn’t mean it isn’t useful. You can use AI outputs to inspire you, kickstart your thinking, or transform something you’ve already created into something new.

When it comes to prompt engineering a little bit of extra time can substantially increase quality, but it isn’t the be-all and end-all. If you just want to quickly ideate or get a rough draft together, you can probably get away with simple, basic prompts. It is probably more time-efficient. However, if you want robust, creative, and innovative ideas from the start, a little bit of prompting can go a long way.

Summary

Through two examples we have been able to show multiple concepts in action that can easily—and substantially—improve output quality:

Treat AI as a colleague. Give them the context of the conversation before jumping into solution mode.

Think step by step and ask ChatGPT to devise some steps to solve the problem first (or better yet, give ChatGPT the steps you want it to take!).

Tell ChatGPT to assume a role/persona.

Provide examples of the outputs you are expecting. Don’t take for granted that ChatGPT knows what you want.

Gen AI is non-deterministic. Every response will be different and it is our job to help steer conversations towards valuable outputs.

There's a wealth of techniques out there designed to extract even more from gen AI tools. I plan to explore these in future posts, but for now I'd like to emphasize that by simply adhering to these principles you'll already be at an advantage. By my estimate, you will be applying gen AI better than at least 80% of your peers.
